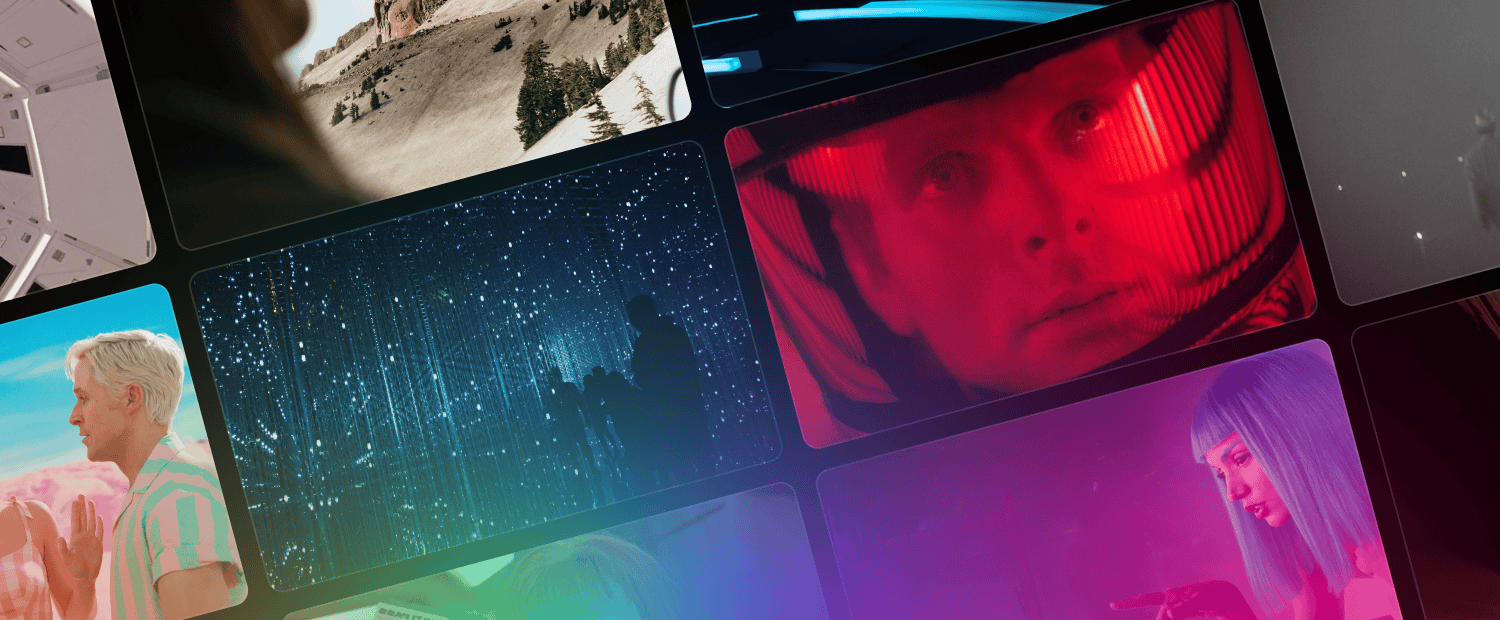

Our unique video search is the most powerful in the world. Its full name is the Multimodal Universal Search Engine, and it has some important advantages over other types of search.

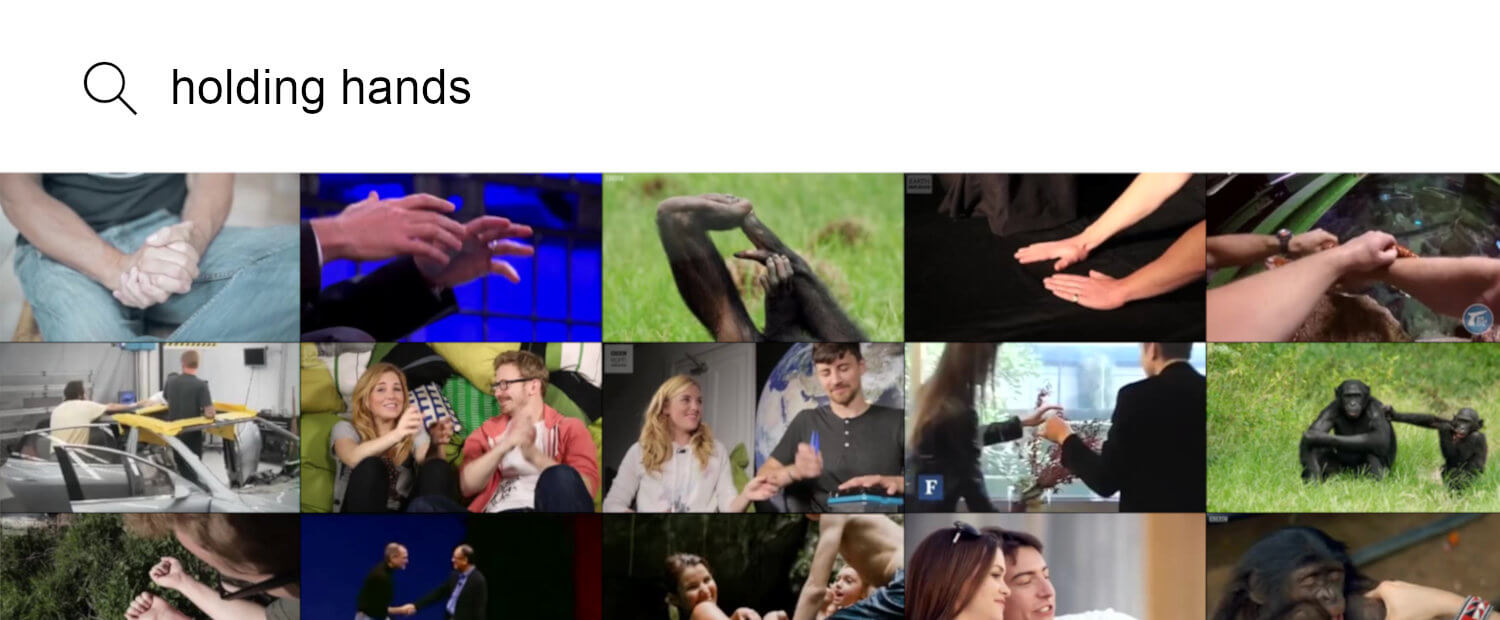

Humans experience and describe the world in several dimensions. We see, we listen, we feel, and when we describe a scene to someone, we talk about what could be seen, who was there, and what they said or did.

Before muse.ai introduced the Multimodal Universal Search Engine, video search engines were limited to the data they were given, such as titles, descriptions, or tags. These summaries have been used to classify videos, but they fall short of capturing all the modalities (e.g., sound and image) that humans experience when watching a video.

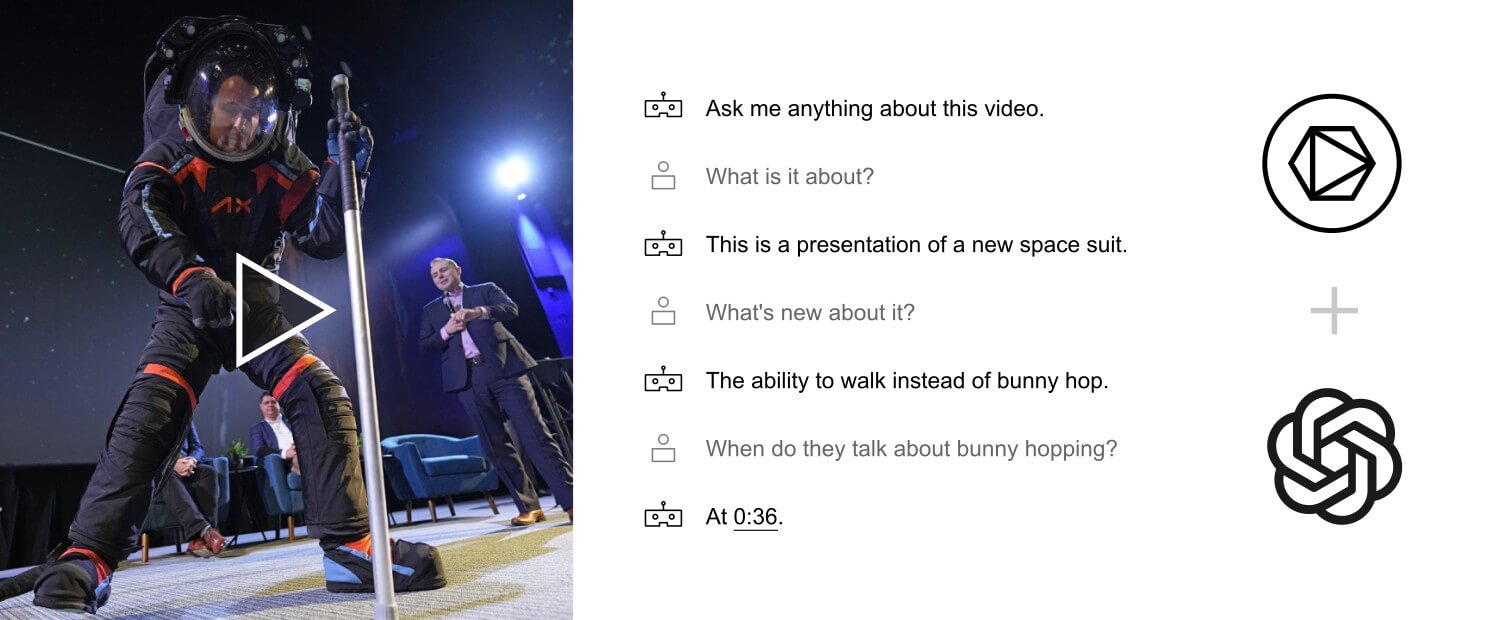

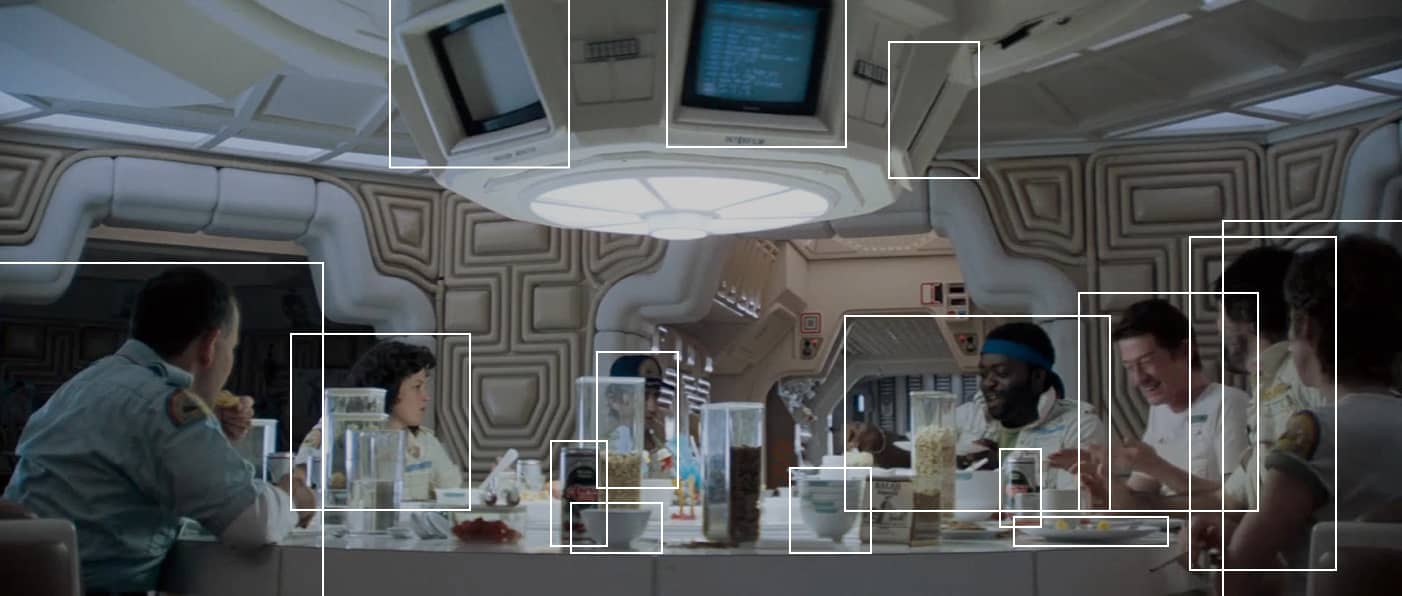

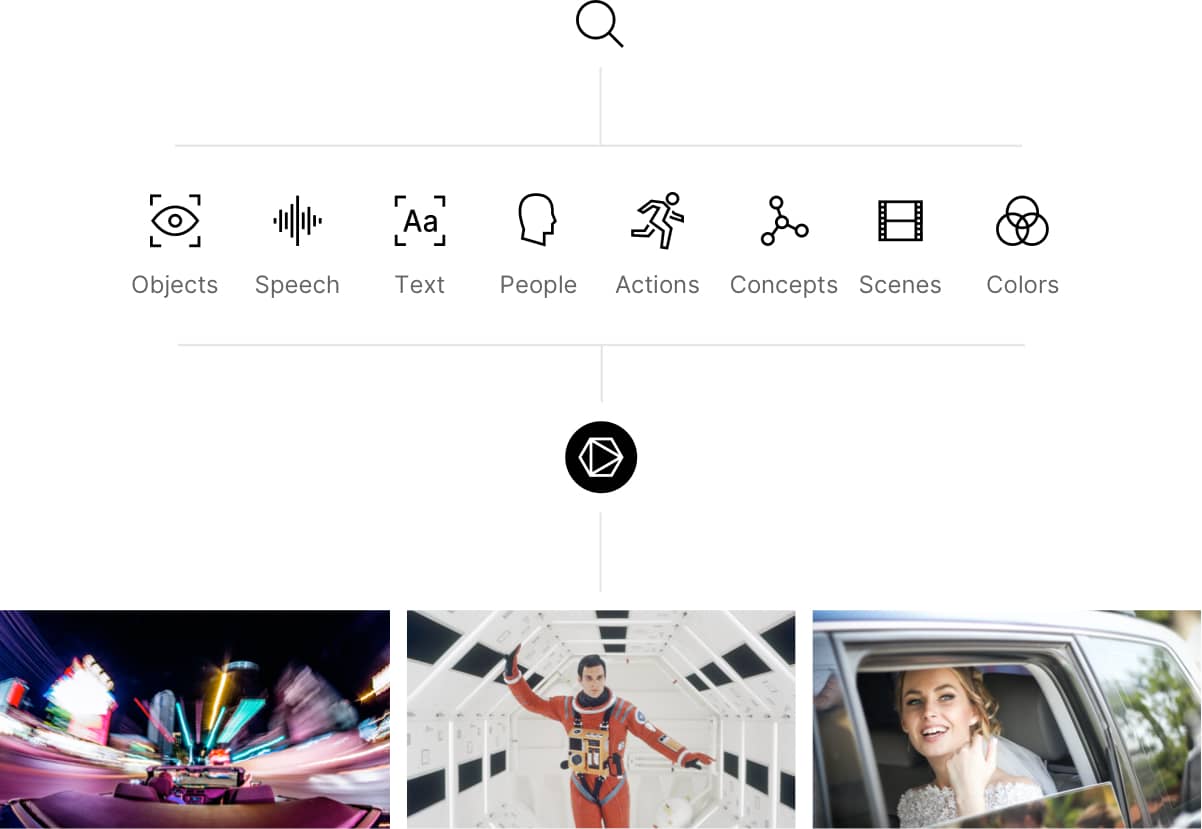

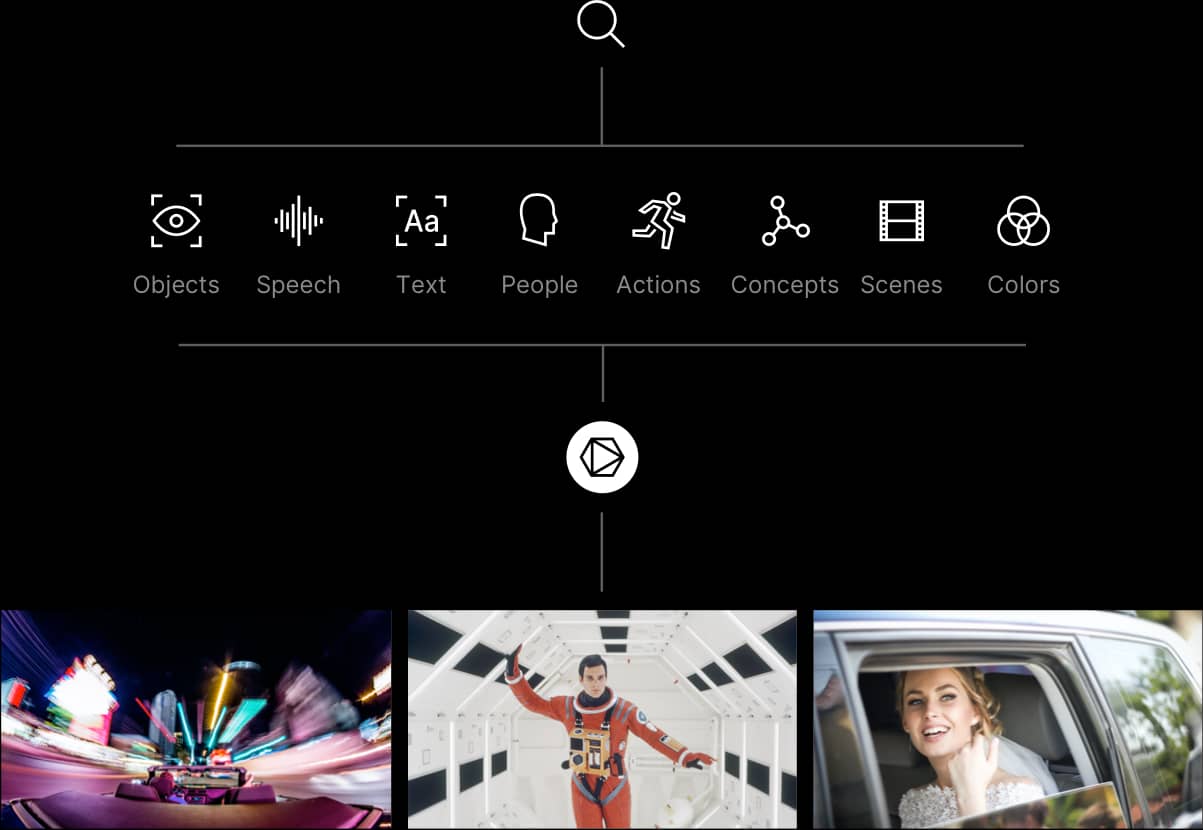

With this in mind, our advanced artificial intelligence was created to see and hear video the same way humans do. When you upload a video into our system, it is automatically analyzed and indexed along several dimensions, turning previously invisible elements into data you can search and interact with. Speech, faces, objects, sounds, actions, text, and concepts are just a few of the dimensions muse.ai search operates in.

Empowered by this new search, anyone can describe a scene as they would to a friend. For example, you can click on earth, marble, or sun to search for them in the video below, combine them however you like, or search for anything else in it.

Try searching for anything inside the video, and combine words to get more precise results.
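For the curious, here is a rough idea of why combining words narrows the results. This is only a toy sketch, not how muse.ai is actually implemented: it assumes each detection (a spoken word, a recognized object, and so on) is stored with a time range, and that a multi-word query keeps only the moments where every word was detected. The sample detections and the `build_index` and `search` helpers are made up for illustration.

```python
from collections import defaultdict

# Hypothetical detections: (modality, label, start_sec, end_sec).
# Real analysis output would look different; these values are invented.
DETECTIONS = [
    ("object", "earth", 0, 12),
    ("object", "marble", 8, 20),
    ("speech", "sun", 10, 30),
    ("object", "sun", 25, 40),
]


def build_index(detections):
    """Map each label to the time ranges where it was detected, in any modality."""
    index = defaultdict(list)
    for modality, label, start, end in detections:
        index[label.lower()].append((start, end, modality))
    return index


def search(index, query):
    """Return the time ranges in which every word of the query was detected."""
    terms = query.lower().split()
    if not terms:
        return []
    # Start with all ranges for the first word, then intersect with each next word.
    results = [(start, end) for start, end, _ in index.get(terms[0], [])]
    for term in terms[1:]:
        spans = index.get(term, [])
        results = [
            (max(s1, s2), min(e1, e2))
            for s1, e1 in results
            for s2, e2, _ in spans
            if max(s1, s2) < min(e1, e2)  # keep only ranges that overlap
        ]
    return sorted(set(results))


index = build_index(DETECTIONS)
print(search(index, "sun"))               # [(10, 30), (25, 40)]
print(search(index, "marble sun"))        # [(10, 20)]
print(search(index, "earth marble sun"))  # [(10, 12)]
```

Each added word shrinks the matching time range, which is the effect you see when you combine words in the search bar.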

Videos and video collections that span years or even decades of playtime can now be navigated and searched in an instant. These videos and collections can be embedded on any website.

Wasting time skipping back and forth through a video to find the moment you're looking for is a thing of the past. The future has finally arrived.

See it in action! Try searching through hours of video with the search bar below.